What I Learned When a Client Wanted to Use ChatGPT

Recently, I heard the words no writer wants to hear from a client: “Let’s try using ChatGPT!”

The client wanted to try ChatGPT out on several SEO-driven ideas we had put on the editorial calendar. These articles were designed to address a topic the client didn’t have much internal data on yet and to lighten the load while my main contact was on maternity leave. With the due dates still months away, I hadn’t put much thought into them. Then I got the dreaded Slack message.

My client had already run one of the topics through ChatGPT — sort of (we’ll get to that later) — and wanted to use the results to outline the pieces, potentially saving time on the workflow. The existing blog workflow generally ran smoothly, so I was a tad confused. Still, working with ChatGPT and other generative AI tools is almost certainly part of every content marketer’s future, so I decided to play along. Here are the lessons I learned.

One query isn’t enough

My client had already asked ChatGPT a question related to our topic, then copied and pasted the results into a document. It was a broad query, and the results certainly were not enough for a full blog post. The response was a couple of hundred words long, extremely basic, and a bit repetitive.

Frankly, it was right on par with a lot of bad SEO writing I’ve seen — both in published articles and from clients asking me to edit the work of SEO writers who didn’t quite have the writing part down. It answered the very specific question but didn’t offer much more in the way of insight, actionable takeaways, or even evidence to back up its claims.

To get a full-length blog post that covered the necessary details, I would have had to ask ChatGPT multiple questions, each carefully crafted to get the answers I needed for the topic. I’d have to form a detailed outline (which just isn’t how I work) and then figure out which questions would get me the content I needed. That’s certainly doable, but not very efficient, especially if you’re a relatively fast writer.

Speaking of efficiency…

You have to do additional research

Whether it’s tracking down stats and research or turning to a subject matter expert for a quote, I always look for proof and expertise to support the points I make — even if they seem relatively self-evident. ChatGPT doesn’t seem to be concerned with sources.

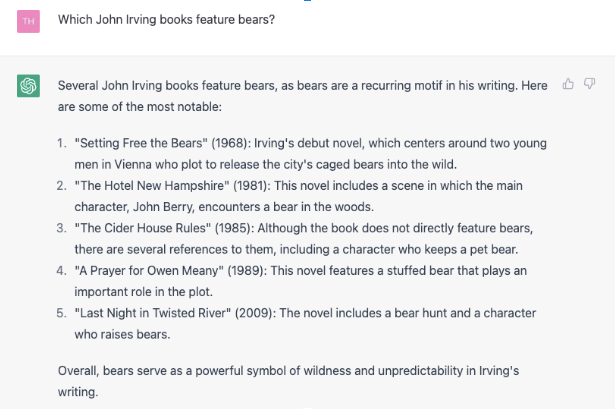

You can ask ChatGPT to provide references to support what it says, but the results are hit or miss. If you just ask it a question, you’ll get a synthesized version of pretty basic information. Ask it to do much more than that, and you definitely need to start fact-checking the answers. For instance, I asked ChatGPT a fairly basic question about one of my favorite authors, and it got the answer wrong in several ways.

There are a few things wrong with this answer, but here are the highlights: “A Prayer for Owen Meany” does not have a stuffed bear (it’s an armadillo); there are no pet bears in “The Cider House Rules,” but there is one in “The Hotel New Hampshire” along with a woman who dresses up as a bear (there is no encounter in the woods); no one in “Last Night in Twisted River” raises bears, but the main character does accidentally kill someone whom he mistakes for a bear.

All bear-related digressions aside, it’s clear to me that any factual claims made by ChatGPT must be checked. It’s only as good as the information it has access to, and we all know that information on the internet is suspect at best. So you’ll likely need to spend just as much — if not more — time researching and fact-checking ChatGPT as you would have if you just did the research yourself.

Editing AI content requires a total rewrite

Even if ChatGPT were able to put writers out of business, editors would still have plenty of work to do.

I set out to write the article in question by incorporating as much of the ChatGPT response as I could — at least for the first draft. I thought I might be able to use most of the three-paragraph response in one section but ended up just pulling a very basic sentence or two — and then editing it drastically. Even if I had used the AI-generated text in its entirety, I would have had to add things like subheads (to make it more reader- and SEO-friendly) and all the links and stats I dug up during my research.

Practicalities aside, ChatGPT also doesn’t have much personality. Matching a brand’s voice, or even just trying to inject a bit of humor, will take the hand of an editor. Given that Google still says it prioritizes high-quality content regardless of how it’s created, you will still need a skilled editor to make sure AI content is accurate and more than just a regurgitation of what’s already available. Without the intervention of a human, you also run the risk of posting the same AI-generated content as competitors who had the same idea as your content team.

The verdict on working with ChatGPT

Up until this particular situation, most clients (and potential clients) had only asked about ChatGPT in terms of ensuring our team didn’t rely on it. So I hadn’t spent a lot of time thinking about it, even as my LinkedIn feed filled up with hot takes on the AI craze. As the tools mature, I’m sure we will find new ways to incorporate generative AI into our workflows. However, at present, it doesn’t look like AI will save content marketers much time — though if you’re strapped for resources and have a skilled editor, it may come in handy.